Introduction to Intel® RealSense™ Touchless Control Software

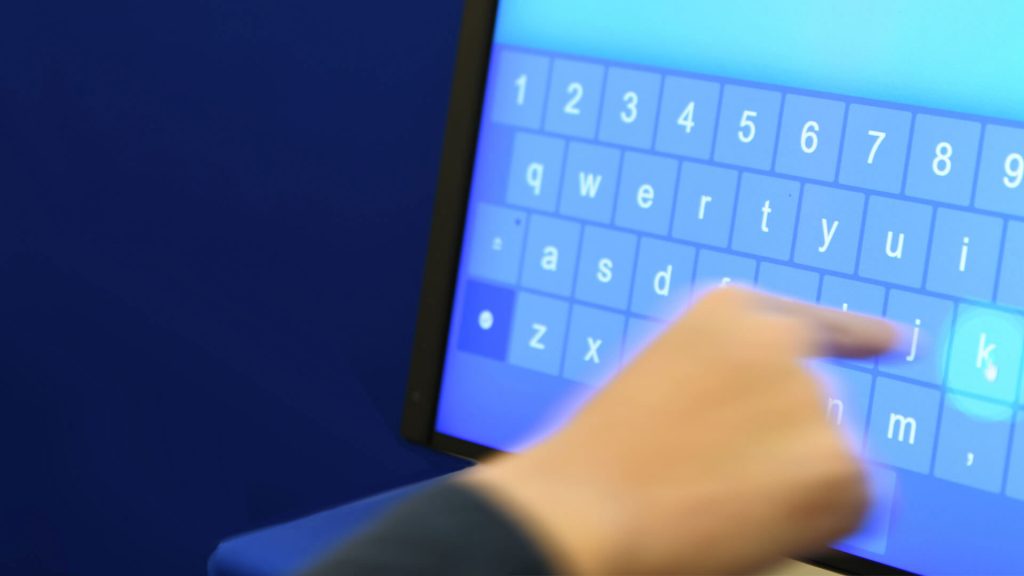

The Intel® RealSense™ Touchless Control Software (TCS) was developed to make almost any computer screen, monitor, or flat surface “touchless”, allowing users to interact with screens or surfaces without physically touching them, as shown in Figure 1. The main motivation is to minimize shared physical contact with public screens. This may be desirable for touchscreens in airports, ATMs, checkout counters, digital signage, hotels, or hospitals, to help reduce the transmission of germs or viruses. Touchless control may also be required in environments where touching screens is prohibited entirely, such as industrial kitchens or workshops where users’ fingers may be dirty or wet.

Figure 1. Example of interacting with a screen without physically touching it.

The TCS application was designed to enable touchless interaction with screens ranging in size from small to large, in both portrait and landscape modes, and even with sub-regions of screens. The three simple goals of TCS are to enable:

- Quick and easy hardware and software installation

- Support for existing user interfaces

- Zero learning curve for customers

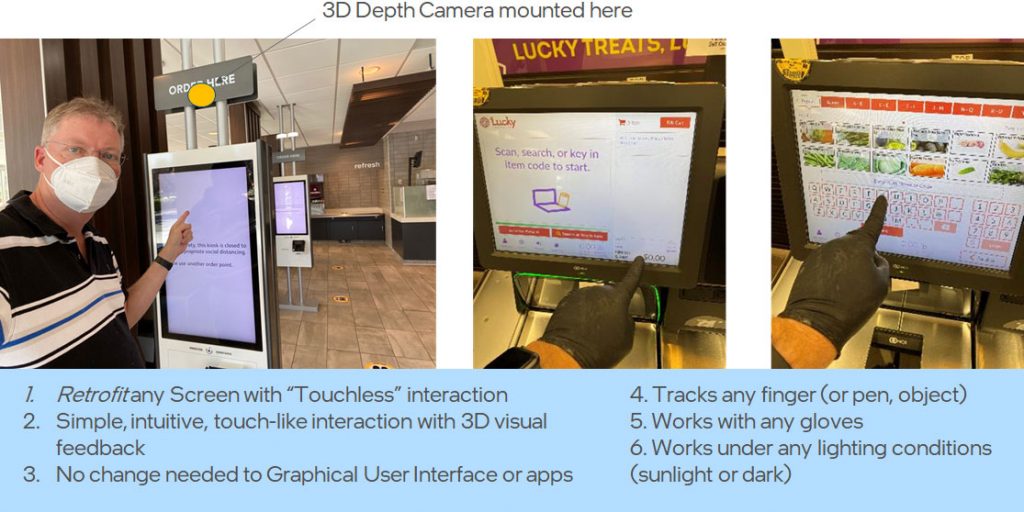

The first two goals relate to simple installation and easy deployment, including the ability to retrofit existing screens. Once the camera is positioned correctly above the screen and plugged into a USB 3 port on the host, the software installation can be completed in about a minute. Because the TCS application runs in the background and acts as a new touch input device, it enables touch input for any existing application, be it a fast-food menu, a web browser, or a desktop interface.

Goal 3 is extremely important because it focuses on a zero-learning-curve experience for customers using the screen. To achieve this, we sought to enable touchless interactions that do not require users to learn any new “gesture language”. Any user familiar with normal touchscreens should be able to use the same app in “touchless” fashion immediately, without any instructional signage, explanation, or guidance. Users of personal smartphones have plenty of time to become familiar with many new and changing gesture shortcuts (such as swipes, pinches, multi-finger interactions, double taps, and swipes from different parts of the screen). By contrast, the design guidance for TCS was that even users who interact with these “touchless” screens only once a year, for example for airline check-in or fast-food ordering, should still be able to operate them quickly and easily. This does not mean that such shortcuts cannot be enabled technically in the future, only that they fall outside the initial design goals.

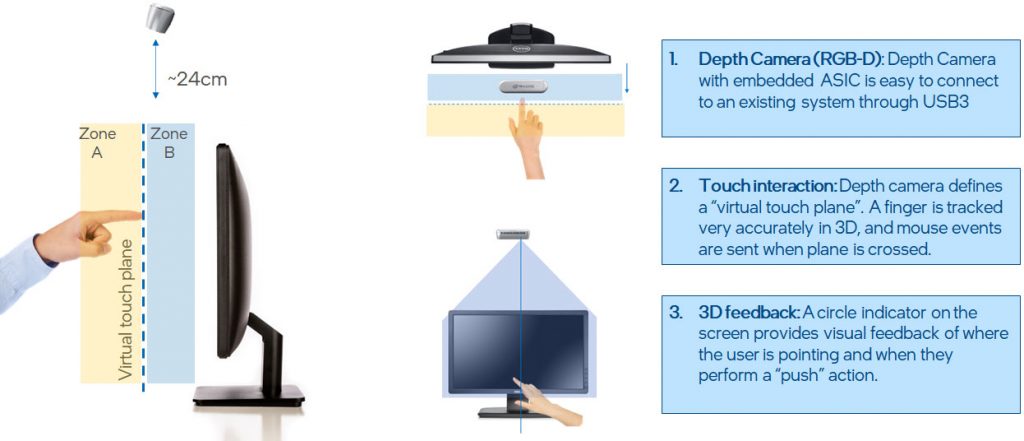

Figure 2. A simple “touchless” user interaction, in which a virtual touch screen hovers slightly above the real screen. When the user’s finger touches the virtual touch plane, touch events are sent to the system. In the recommended configuration, the Intel RealSense camera is mounted above the screen to provide full visual coverage of the volume in front of the screen.

Figure 2 shows the simple operating principle, which is based on a “virtual touch plane” that hovers a few inches above the real screen at one-to-one scale. As a user brings a finger close to the screen, the system immediately detects it and tracks it in 3D. When the finger enters the predefined engagement Zone A, a cursor appears on screen at nominally the correct X-Y location (hence the one-to-one mapping). As the finger moves closer to the screen, the cursor icon changes size to show the user how far their finger is from the virtual touch plane. This visual feedback is key to avoiding confusion caused by optical parallax: because the finger hovers in the air above the screen, it can appear to be over different parts of the screen depending on the user’s viewing angle. The 3D visual feedback removes this ambiguity.
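To make the geometry concrete, here is a minimal Python sketch of the cursor mapping described above. It assumes the fingertip position has already been expressed, through calibration, in screen-aligned millimetres (x and y across the screen, z as height above it). The zone depths, cursor radii, and all names (`ScreenGeometry`, `ZoneConfig`, `cursor_for_finger`) are hypothetical illustration values, not TCS parameters or a TCS API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ScreenGeometry:
    """Hypothetical calibration result: physical size and pixel resolution."""
    width_mm: float
    height_mm: float
    width_px: int
    height_px: int


@dataclass
class ZoneConfig:
    """Hypothetical zone depths, measured as height above the physical screen."""
    plane_mm: float = 50.0       # height of the virtual touch plane
    zone_a_mm: float = 150.0     # outer edge of engagement Zone A
    max_radius_px: float = 40.0  # cursor size when the finger first engages
    min_radius_px: float = 8.0   # cursor size at (or below) the virtual plane


def cursor_for_finger(
    x_mm: float, y_mm: float, z_mm: float,
    screen: ScreenGeometry, zones: ZoneConfig,
) -> Optional[Tuple[int, int, float]]:
    """Return (px, py, radius) for the cursor, or None if no cursor is shown."""
    # No cursor until the finger enters Zone A.
    if z_mm > zones.zone_a_mm:
        return None
    # No cursor if the finger is outside the screen's footprint.
    if not (0.0 <= x_mm <= screen.width_mm and 0.0 <= y_mm <= screen.height_mm):
        return None
    # One-to-one mapping: scale physical X-Y directly to pixel coordinates.
    px = round(x_mm / screen.width_mm * (screen.width_px - 1))
    py = round(y_mm / screen.height_mm * (screen.height_px - 1))
    # Normalized distance to the plane: 1 at Zone A's outer edge, 0 at the plane.
    t = (z_mm - zones.plane_mm) / (zones.zone_a_mm - zones.plane_mm)
    t = min(max(t, 0.0), 1.0)
    # The cursor shrinks linearly as the finger approaches the plane.
    radius = zones.min_radius_px + t * (zones.max_radius_px - zones.min_radius_px)
    return px, py, radius
```

A linear size ramp is only one choice here; any monotonic mapping from distance to cursor size would give the user the same depth cue.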

Upon “touching” the virtual touch plane, a touch event (or “mouse click”) is sent to the system, and auditory feedback, for example a “click” sound, is provided. The cursor then changes color while the finger is in the blue Zone B, and the user must withdraw their finger back past the virtual touch plane before making a new selection. Care was also taken to eliminate false clicks when the finger moves quickly sideways across the screen: a “click” is only permitted when the Z-speed (toward or away from the screen) is much larger than the finger’s speed across the X-Y plane of the screen. The pointing is also stabilized to help anyone with shaky hands. The tracking is extremely fast, with low latency. This is possible because most of the complex optical tracking is performed by the camera’s embedded processor, which calculates a dense 3D depth map of the volume in front of the screen at a high frame rate. As a result, the compute load on the host PC is almost negligible, and the main requirement on the host is a USB 3 port.
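The click rules above can be sketched as a small state machine: a click fires only when the fingertip crosses the virtual plane while its Z-speed dominates its lateral speed, and the detector re-arms only after the finger has been withdrawn back past the plane. This is a minimal sketch, not the TCS implementation. The speed ratio, the small re-arm margin above the plane (a hysteresis we add to suppress jitter at the plane), and the exponential moving average used for stabilization are all assumptions, since the post does not say how TCS stabilizes pointing. `ClickDetector` and its parameters are hypothetical names.

```python
import math
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class ClickDetector:
    plane_mm: float = 50.0          # hypothetical virtual-plane height above the screen
    speed_ratio: float = 3.0        # hypothetical: |Z-speed| must exceed 3x lateral speed
    release_margin_mm: float = 5.0  # hypothetical re-arm margin above the plane
    smoothing: float = 0.5          # EMA weight given to the newest X-Y sample
    armed: bool = True              # True when a new click is allowed
    _prev: Optional[Tuple[float, float, float, float]] = field(
        default=None, init=False, repr=False)
    _smooth_xy: Optional[Tuple[float, float]] = field(
        default=None, init=False, repr=False)

    def update(self, x: float, y: float, z: float, t: float):
        """Feed one fingertip sample (mm, mm, mm, seconds).

        Returns (click, smoothed_xy).
        """
        # Stabilize pointing with an exponential moving average of X-Y.
        if self._smooth_xy is None:
            self._smooth_xy = (x, y)
        else:
            a = self.smoothing
            sx, sy = self._smooth_xy
            self._smooth_xy = (sx + a * (x - sx), sy + a * (y - sy))

        click = False
        if self._prev is not None:
            px, py, pz, pt = self._prev
            dt = t - pt
            if dt > 0:
                vz = (z - pz) / dt                       # in/out speed
                vxy = math.hypot(x - px, y - py) / dt    # lateral speed
                crossed = pz > self.plane_mm >= z        # moved through the plane
                if self.armed and crossed and abs(vz) > self.speed_ratio * vxy:
                    click = True
                    self.armed = False                   # wait for withdrawal

        # Re-arm only once the finger is back above the plane (plus margin).
        if not self.armed and z > self.plane_mm + self.release_margin_mm:
            self.armed = True

        self._prev = (x, y, z, t)
        return click, self._smooth_xy
```

Comparing speeds rather than positions is what lets a fast sideways swipe that happens to dip through the plane pass without a click, while a deliberate push toward the screen registers.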

It should be noted that the solution we provide is not specific to “finger” tracking, so pens or other pointing devices can be used as well. Furthermore, care was taken in the system design to ensure that fingers can be tracked under any lighting conditions, from complete darkness to bright outdoor sunlight, during fast motion, and even when the user wears black, featureless gloves, which can cause difficulty for some optical systems.

For a detailed overview of all TCS features and the installation instructions, refer to the separate document “Intel RealSense Touchless Control Software Quick Guide”. It covers hardware setup, installation of the Windows software, and how to use the software. It also describes different ways to calibrate for screens or setups of different sizes, as well as all the available options and settings.

Finally, we note that adding an Intel RealSense camera to a system has value beyond TCS usages. The Intel RealSense Depth Camera D435 includes a 3D depth sensor, an infrared camera, and a color camera. All of these sensors can be accessed directly through the open-source Intel RealSense SDK and used to unlock many additional computer vision usages, including but not limited to user proximity detection, full gesture recognition, object detection, and emotion detection.
