
Today, professional sports is one of the largest industries. One game, one team, one player, one moment can have millions of dollars on the line. Those moments are moments of uncertainty. Can we ever know what is going to happen in the future? Can we know which player to draft, which one will consistently perform?

How-To: A Simple Way to 3D Scan an Environment

May 27, 2020

In this How-To, we are going to share a simple way to 3D scan an environment that you can then visit and share with friends and family. This process will involve multiple steps and pieces of software, but should be relatively easy to follow. What you will need: Intel® RealSense™ Depth Camera D415, D435, D435i…

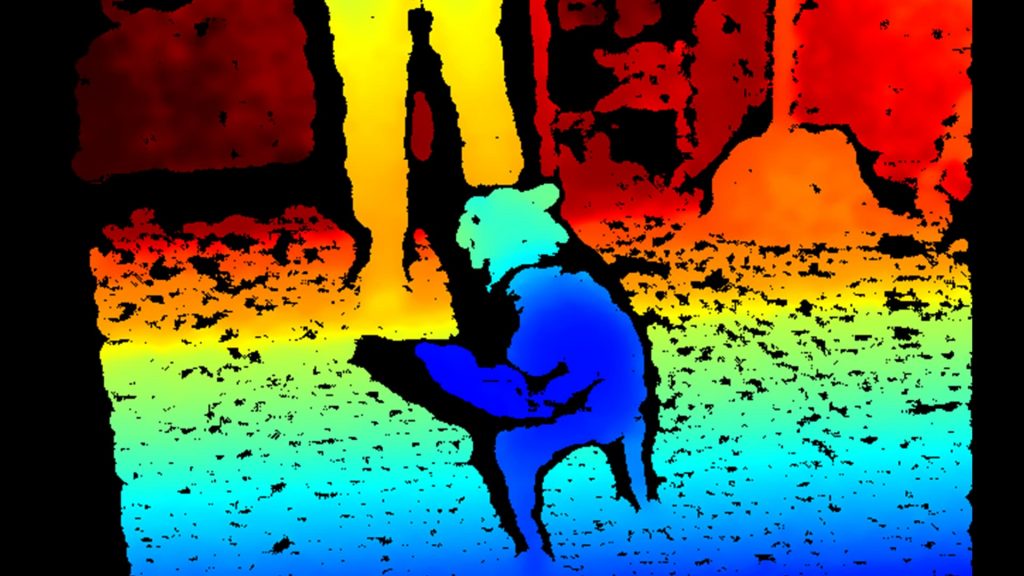

Beginner's guide to depth (Updated)

July 15, 2019

We talk a lot about depth technologies on the Intel® RealSense™ blog, for what should be fairly obvious reasons, but often with the assumption that the readers here know what depth cameras are, understand some of the differences between types, or have some idea of what it's possible to do with a depth camera. This…

Computer vision in Robotics: Autonomous Mobile Robots, Robotic Arms and articulated robots, Humanoid robots and more

July 28, 2021

Computer vision in robotics. A revolution in how robots see the world. Lightweight, low power and easy-to-use depth cameras that give robots the ability to navigate landscapes, avoid obstacles and recognize objects, people and more. The world of computer vision in robotics comes into focus. When robots can see, measure, analyze, and respond to their environment, a whole new world of possibilities…

Case study: Visual-Inertial Tracking

February 22, 2019

Intel RealSense Tracking Camera T265. Robust Visual-Inertial Tracking from a Camera that Knows Where it's Going. The Intel® RealSense™ Tracking Camera T265, powered by the Intel® Movidius™ Myriad™ 2 vision processing unit (VPU), delivers 6DoF, inside-out tracking for highly accurate, GPS-independent guidance and navigation for robots and other mobility-focused implementations. Gartner identifies “autonomous things” as Trend Number 1…

How robotics is the next big thing

April 15, 2020

In a rapidly changing world, it's hard to predict where technology will gain traction in different markets and when. It's worth taking a look at those areas of robotics and automation that are likely to grow in the next few years, in part due to increased demand but also the increasing advances in robotics capabilities.…

Intel® RealSense™ tracking camera T265 and depth cameras D400 series – Better together

June 7, 2019

While many people are familiar with Intel® RealSense™ technology as a producer of high-quality depth cameras, the introduction of the Intel® RealSense™ Tracking Camera T265 has created a lot of questions about the difference between our tracking and depth solutions, and the applications for both. With a long history of leadership in the depth camera…
