Gartner identifies “autonomous things” as Trend Number 1 in its list of Top 10 Strategic Technology Trends for 2019.+ A critical aspect to making robots, drones, and other mobile devices autonomous is their ability to interact independently and intelligently with their environments.

Autonomy depends fundamentally on the devices being able to track their locations as they move through space. Building on that tracking, devices must be able to navigate successfully through unfamiliar spaces while discovering, monitoring, and avoiding still and moving obstacles in real time. The mechanisms that enable autonomous tracking must be highly accurate, operate at low power, and be flexible enough to integrate with the diverse needs of systems being developed in the market.

The Intel® RealSense™ Tracking Camera T265 enables solutions to these challenges as a stand-alone, six-degrees-of-freedom (6DoF) inside-out tracking sensor. The device fuses inputs from multiple sensors and offloads image processing and computer vision from the host system to provide highly accurate, real-time position tracking with low latency and low power consumption.

The Intel RealSense Tracking Camera T265 is designed for flexibility of implementation and to work well on small-footprint mobile devices such as lightweight robots and drones. It is also optimized for small-scale computers such as NUCs or Raspberry Pi* devices, and for connectivity with devices such as mobile phones or augmented reality headsets. With the introduction of the Intel RealSense Tracking Camera T265, the robotics industry for the first time has the benefit of tracking performed entirely with embedded compute, which is handled by the Intel® Movidius™ Myriad™ 2 VPU at an affordable price point.

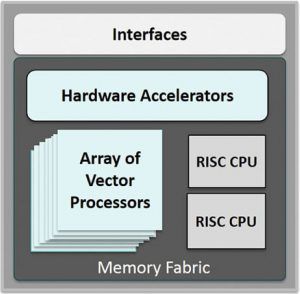

The Intel Movidius Myriad 2 VPU is a system-on-chip component that is purpose-built for image processing and computer vision at very high performance per watt, including for space-constrained implementations. Its key architectural features are the following:

• Vector processor cores are optimized for machine vision workloads.

• Hardware accelerators increase throughput for imaging and computer vision.

• General-purpose RISC CPU cores coordinate and direct workloads and interaction with external systems.

The Intel® Movidius™ Myriad™ 2 VPU.

The Intel RealSense Tracking Camera T265’s spatial sensing and tracking capabilities are based on technology developed by RealityCap, which was acquired by Intel in 2015.

An Integrated Peripheral for Autonomous Location Tracking

The Intel RealSense Tracking Camera T265 is roughly 4 x 1 x 0.5 inches (108 mm x 24.5 mm x 12.5 mm) in size, weighs around two ounces (55 g), and draws just 1.5 watts to operate the entire system, including the cameras, IMU, and VPU. Combined with the powerful compute capabilities of the Intel Movidius Myriad 2 VPU, the platform delivers high performance per watt, for long battery life in mobile robots and other portable systems.

Low system-power consumption is valuable in multiple ways for enabling better user experience through the physical design of devices. In addition to long battery life, physically smaller batteries help reduce the overall weight of devices, including systems that a user might carry or wear for long periods of time. In some cases, it may also remove the need for cumbersome external batteries connected to devices by means of cables.

Sensor Fusion for Re‑Localization and Occupancy Mapping

The Intel RealSense Tracking Camera T265 performs inside-out tracking, meaning that it does not depend on external sensors for its understanding of the environment. The tracking is based primarily on information gathered from two onboard fish-eye cameras, each with approximately a 163-degree field of view (±5 degrees) and capturing images at 30 frames per second. The wide field of view from each camera sensor helps keep points of reference visible to the system for a relatively long time, even if the platform is moving quickly through space.
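The benefit of the wide field of view can be put into rough numbers. The sketch below assumes the simplest possible case, a camera rotating in place at a constant rate while a static feature sweeps across the full field of view. The 90 degrees-per-second turn rate and the 70-degree comparison lens are hypothetical values chosen for illustration; only the 163-degree and 30 fps figures come from the text.

```python
def frames_in_view(fov_deg: float, rotation_deg_per_s: float, fps: float) -> float:
    """Frames for which a static feature stays inside the field of view
    while the camera rotates in place at a constant rate (idealized)."""
    if rotation_deg_per_s <= 0:
        raise ValueError("rotation rate must be positive")
    seconds_visible = fov_deg / rotation_deg_per_s
    return seconds_visible * fps


# 163 degrees and 30 fps are from the text; the turn rate is hypothetical.
wide = frames_in_view(163.0, 90.0, 30.0)
# Hypothetical narrower lens, for comparison only.
narrow = frames_in_view(70.0, 90.0, 30.0)

print(f"wide lens:   {wide:.1f} frames")
print(f"narrow lens: {narrow:.1f} frames")
```

Under these assumptions the wider lens observes the same feature for more than twice as many frames, which gives the tracking algorithm more observations of each reference point before it leaves the image.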

A key strength of visual-inertial odometry is that the various sensors available complement each other. The images from the visual sensors are supplemented by data from an onboard inertial measurement unit (IMU), which includes a gyroscope and accelerometer. The aggregated data from these sensors is fed into simultaneous localization and mapping (SLAM) algorithms running on the Intel Movidius Myriad 2 VPU for visual-inertial odometry.

Block diagram of the Intel® RealSense™ Tracking Camera T265.

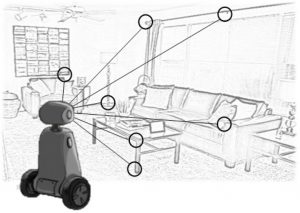

The SLAM algorithm identifies sets of salient features in the environment, such as the corner of a room or of an object, that it can recognize over time and use to infer the device’s changing position relative to those points. This approach is similar in practice to how ocean-going ships in pre-modern times used constellations of stars to navigate.

Together, the combination of sensors provides higher accuracy than would be possible using just one type. The visual information also prevents long-term drift, or the accumulation of small errors in navigation calculations over time, which would cause inaccuracy of position information. The IMU operates at a higher frequency than the cameras, allowing for quicker response and recognition by the algorithm to changes in the device’s position.
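The way the two sensor types complement each other can be illustrated with a toy one-dimensional example. This is a simple complementary-style correction, not the SLAM algorithm that runs on the device: a biased inertial velocity is integrated at a high rate, and a slower visual position fix (assumed exact here) periodically pulls the estimate back. The update rates, bias, and gain are all hypothetical values.

```python
def fuse(true_velocity, imu_bias, dt, steps, visual_every, gain):
    """Return the final absolute position error after `steps` updates.

    The estimate integrates a biased inertial velocity on every step.
    Every `visual_every` steps (0 disables this) a visual fix corrects
    the estimate by `gain` times the remaining error.
    """
    true_pos = 0.0
    est_pos = 0.0
    for step in range(1, steps + 1):
        true_pos += true_velocity * dt
        # High-rate inertial prediction: responsive, but the bias accumulates.
        est_pos += (true_velocity + imu_bias) * dt
        if visual_every and step % visual_every == 0:
            # Low-rate visual correction: assumed drift-free in this sketch.
            est_pos += gain * (true_pos - est_pos)
    return abs(est_pos - true_pos)


# Hypothetical setup: 200 Hz inertial updates for 10 s, 0.05 m/s bias,
# and a visual fix every 7th step (roughly 30 Hz).
imu_only = fuse(1.0, 0.05, 0.005, 2000, visual_every=0, gain=0.5)
fused = fuse(1.0, 0.05, 0.005, 2000, visual_every=7, gain=0.5)

print(f"inertial only: {imu_only:.3f} m error")
print(f"with visual fixes: {fused:.4f} m error")
```

With inertial data alone the error grows without bound over time, while the periodic visual fixes keep it bounded to a few millimeters in this setup.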

The peripheral also provides support for additional sensors if needed, including a wheel odometer for wheeled robots as well as cliff or bump sensors. Inputs from the wheel odometer can be fed into the SLAM algorithms running on the VPU for additional flexibility and robustness in providing position information.

Note: When a wheel odometer is used, it requires centimeter-accurate calibration with the Intel® RealSense™ Tracking Camera T265. This calibration is customer-generated using a provided example and instructions.

Visual-Inertial Odometry and Re‑Localization

By comparing the images from each of the two cameras and calculating the differences between the two, the camera can infer the distance to a salient feature being used as a reference point by the algorithm. Specifically, a greater difference between the positions of the feature as viewed by each of the two cameras corresponds to a smaller distance to the feature, because nearby features shift more between the two views than distant ones. These estimates are then refined as the feature is observed over subsequent camera frames, with additional information from the IMU added for even better estimation. A map of visual features and their positions is built up over time. This is visual-inertial odometry.
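The relationship between the two views and distance can be sketched with the textbook model for an idealized, rectified pinhole stereo pair, in which depth is inversely proportional to disparity: Z = f · B / d. The T265 uses fish-eye lenses, so real images would need to be undistorted first, and the focal length and baseline below are hypothetical values, not device specifications.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters for an idealized rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera parameters, for illustration only.
FOCAL_PX = 285.0    # focal length expressed in pixels
BASELINE_M = 0.064  # distance between the two cameras

near = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity_px=20.0)
far = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity_px=5.0)

print(f"20 px disparity -> {near:.3f} m")
print(f" 5 px disparity -> {far:.3f} m")
```

A feature whose position differs by 20 pixels between the two images is four times closer than one that differs by 5 pixels, which is why distant features yield noisier depth estimates and benefit most from refinement over many frames.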

Visual-inertial re-localization, using visual points of reference to infer position.

Re-localization is the ability of the camera to use the features it has seen before to recognize when it has returned to a familiar place. For example, if a drone makes an aerial loop and then returns to its original position, the algorithm is able to compare the visual environment against its database of salient features and their positions in space to re-localize. The camera is able to discern its position against that static frame of reference, locating its point of origin with an error margin of less than one percent.
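One common way to express an error figure of this kind is the gap between the start and end positions of a closed loop, taken as a percentage of the distance travelled. The sketch below assumes that interpretation; the source does not spell out how its figure was computed, and the sample path is invented for illustration.

```python
import math


def loop_drift_percent(path):
    """Loop-closure error as a percentage of total distance travelled.

    `path` is a sequence of (x, y, z) positions in meters that is meant
    to end where it started.
    """
    travelled = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    if travelled == 0:
        raise ValueError("path has zero length")
    closure_error = math.dist(path[0], path[-1])
    return 100.0 * closure_error / travelled


# Hypothetical 10 m square loop whose estimated end point misses the
# starting point by a couple of tenths of a meter.
path = [(0, 0, 0), (10, 0, 0), (10, 10, 0), (0, 10, 0), (0.1, 0.2, 0)]
drift = loop_drift_percent(path)

print(f"drift: {drift:.2f}% of distance travelled")
```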

Testing onboard a drone both in daytime and night conditions demonstrated that, in both cases, the tracking and position data generated by the peripheral was closely correlated with what was provided by GPS. This result supports the viability of using the peripheral as the means for navigation in areas where GPS is not available, such as under a bridge or inside an industrial structure.

Occupancy Mapping

The Intel RealSense Tracking Camera T265 in combination with a depth camera and cliff sensors enables wheeled or aerial robots to guide themselves with true autonomy through unfamiliar environments. In such a space, the robot would discover its surroundings—including obstacles—and represent them in a 2D or 3D grid where each cell is identified as one of three values: occupied, unoccupied, or unknown.
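A minimal sketch of such a grid follows, assuming a 2D map, a known position and heading from the tracker, and a single range reading from a depth sensor. The names `Cell` and `integrate_range` are hypothetical, and a production system would typically use probabilistic updates rather than overwriting cells.

```python
import math
from enum import Enum


class Cell(Enum):
    UNKNOWN = 0
    FREE = 1
    OCCUPIED = 2


def integrate_range(grid, cell_size, position, heading_rad, range_m):
    """Update the grid from one range reading taken at a known pose."""
    rows, cols = len(grid), len(grid[0])
    x0, y0 = position
    dx, dy = math.cos(heading_rad), math.sin(heading_rad)

    def mark(distance, value):
        col = math.floor((x0 + distance * dx) / cell_size)
        row = math.floor((y0 + distance * dy) / cell_size)
        if 0 <= row < rows and 0 <= col < cols:
            grid[row][col] = value

    # Sample the ray at half-cell spacing; space before the hit is free.
    step = cell_size / 2.0
    for i in range(int(range_m / step)):
        mark(i * step, Cell.FREE)
    # The cell containing the measured endpoint is occupied.
    mark(range_m, Cell.OCCUPIED)


# 5 x 5 grid of 1 m cells, everything unknown to begin with.
grid = [[Cell.UNKNOWN] * 5 for _ in range(5)]
# Robot at (0.5 m, 0.5 m) facing along +x sees an obstacle 3 m ahead.
integrate_range(grid, 1.0, (0.5, 0.5), 0.0, 3.0)

print([cell.name for cell in grid[0]])
```

Cells that no reading has touched remain unknown, which lets a planner distinguish space that is confirmed clear from space that has simply not been observed yet.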

Using such an occupancy map, the robot can calculate potential unobstructed paths that it can take toward goals, as the basis for self-navigation. The T265’s position and orientation information enables the integration of depth and cliff data into a dynamically updating occupancy map of its environment in real time, using only a trivial amount of host compute. The dynamic nature of the maps enables the robot to avoid collisions with moving obstacles, including humans.
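Path calculation over an occupancy map can be sketched with a breadth-first search, one of several standard techniques and not necessarily what any particular robot uses. In this illustration the map is a list of strings in which `.` is unoccupied, `#` is occupied, and `?` is unknown; both occupied and unknown cells are treated as impassable, which is a conservative assumption.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search over unoccupied cells; returns a list of
    (row, col) cells from start to goal, or None if no path exists."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == "."
                    and (nr, nc) not in came_from):
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


grid = [
    "..#.",
    "..#.",
    "....",
    "?...",
]
path = shortest_path(grid, (0, 0), (0, 3))
print(path)

# If a moving obstacle closes the gap, the updated map yields no path
# and the robot has to wait or re-plan.
blocked = ["..#.", "..#.", "..#.", "?.#."]
print(shortest_path(blocked, (0, 0), (0, 3)))
```

Re-running the search whenever the map changes is what allows the planned route to react to obstacles that move.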

Dynamically generated occupancy map of 2D space.

Note: Occupancy maps generated using the Intel® RealSense™ Tracking Camera T265 can be registered to T265’s re‑localization maps, which facilitates sharing across devices and systems.

Host Connectivity and Integration into Devices

Because the Intel Movidius Myriad 2 VPU performs all of the processing necessary for tracking, no additional computation is required for visual tracking on the host. Therefore, the host can be dedicated entirely to controlling other functions, such as actual movement of the device. The T265’s independence from processing resources on other onboard systems makes it simpler to implement, especially into existing designs for robots, drones, and other devices.

The T265 does rely on nonvolatile storage on the host to hold the camera’s firmware, which a software client provided by Intel loads onto the camera at boot time. This arrangement is transparent to the end user, and it reduces the complexity of keeping the firmware current.

The algorithm’s ability to make highly efficient use of the hardware is largely due to its separation of work between the VPU’s two CPU cores and the 12 SHAVE (Streaming Hybrid Architecture Vector Engine) cores. The Intel RealSense technology team has optimized the placement of these workloads within the VPU to reduce the power consumed and accelerate the algorithm.

Development with the librealsense Library

Intel maintains the librealsense project, which provides a cross-platform library for the capture of data from Intel® RealSense™ cameras. The project includes tracking and re-localization APIs that facilitate programmatic control of those functionalities for developers. In addition, librealsense is available as an integrated part of developer kits that also include the hardware needed to utilize the library.
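As a rough illustration of what reading tracking data looks like, the sketch below uses pyrealsense2, the Python wrapper that ships with librealsense. It assumes a librealsense release that includes T265 support and a camera attached to the host. The helper `distance_from_origin` and the function name `print_poses` are hypothetical names introduced for this example.

```python
import math


def distance_from_origin(x, y, z):
    """Straight-line distance in meters from where tracking started."""
    return math.sqrt(x * x + y * y + z * z)


def print_poses(num_frames=50):
    """Stream pose frames from a connected camera and print each position."""
    # Imported here so the helper above works without the wrapper installed.
    import pyrealsense2 as rs

    pipe = rs.pipeline()
    cfg = rs.config()
    cfg.enable_stream(rs.stream.pose)  # request only the 6DoF pose stream
    pipe.start(cfg)
    try:
        for _ in range(num_frames):
            frames = pipe.wait_for_frames()
            pose = frames.get_pose_frame()
            if not pose:
                continue
            data = pose.get_pose_data()
            t = data.translation
            print(
                f"x={t.x:.3f} y={t.y:.3f} z={t.z:.3f} "
                f"dist={distance_from_origin(t.x, t.y, t.z):.3f} m "
                f"confidence={data.tracker_confidence}"
            )
    finally:
        pipe.stop()
```

Calling `print_poses()` with a camera attached prints one line per pose frame. The host code only reads finished poses, since the tracking itself runs on the camera.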

The fact that the full algorithm runs on the Intel Movidius Myriad 2 VPU abstracts away complexity for tracking applications, simplifying overall implementation. In addition, because the visual-inertial sensor-fusion solution is implemented entirely on the camera, it supports connectivity to a broad range of hosts, using USB2 or USB3.

The host may run Microsoft Windows* or Linux*, as long as it has sufficient persistent memory to store the camera firmware (approximately 40 MB). Data from external sensors such as wheel odometers is passed through the USB connection from the host to the camera. The Intel RealSense technology team also plans to make a Robot Operating System (ROS) node available, offering even greater extensibility for the broader robotics community.

“Enabling the Intel RealSense Tracking Camera to deliver rich visual intelligence, at low power, in a small footprint is exactly the kind of thing we built the Intel® Movidius™ Myriad™ 2 VPU for.”

– Gary Brown, AI Marketing, Intel Internet of Things Group

Conclusion

New ground has been broken for visual-inertial tracking with high performance, low power consumption, and low cost. The Intel RealSense Tracking Camera T265 facilitates accurate self-guidance for robots and other devices across a variety of use cases, such as the following:

• Commercial robots, including mobile devices that can perform menial, difficult, or dangerous tasks in settings such as retail locations, warehouses, and e-commerce fulfillment centers.

• Consumer robots, including attendants or assistants for humans as well as sophisticated autonomous appliances, wheelchairs, and vehicles.

• Augmented reality applications that use tracking to facilitate the overlay of external data on the user’s field of vision for everything from industrial information to entertainment.

• Drone guidance for the avoidance of still or moving obstacles, as well as navigation in areas where GPS may be unavailable, such as under a bridge or inside a structure.

The Intel RealSense Tracking Camera T265 is a highly capable building block that provides robust spatial tracking on which companies can build their unique intellectual property. An emerging generation of autonomous devices will re-invent the category, with a rich new set of opportunities for the industry.

Intel® RealSense™ Technology

Intel® Movidius™ Technology

+ https://www.gartner.com/smarterwithgartner/gartner-top-10-strategic-technology-trends-for-2019/

1. Under 1% drift observed in repeated testing in multiple use cases and environments. AR/VR use cases were tested with the T265 mounted on the head in indoor living and office areas with typical indoor lighting, including sunlight entering the room. Wheeled robot use cases were tested with wheel odometer data integrated, again in indoor office and home environments.

2. Sufficient visibility of static tracked visual features is required; the device will not work in smoke, fog, or other conditions where the camera is unable to observe visual reference points.

Intel, Intel Movidius and Intel RealSense are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries.

*Other names and brands may be claimed as the property of others.

© 2019 Intel Corporation. All rights reserved. 0119/MB/MESH 338337-001US
