The Beginner’s Guide to 3D Film Technologies

by Suzanne Leibrick, Intel® RealSense™ Technology Specialist

What is it?

With growing interest in new immersive entertainment platforms like virtual and augmented reality, 3D photos on Facebook, 360° videos on YouTube and more, it can be hard to understand the differences between these photo and video technologies, and even harder to know where to start if you want to create something new.

360° Photos and Videos

Let’s start with one that’s been around for a couple of years now. 360° (and 180°) photos and videos capture a scene from a single point in space, but do so in a way that takes in the entire scene around that point. You can think of this as an extension of panoramic photos – instead of just capturing an image of a large stretch of landscape, a 360° photo captures the whole landscape, including the sky and ground. You can capture 360° photos (also known as photospheres) using your phone and applications such as Google Street View.

360° videos require a special camera or a multi-camera setup, such as a rig of several GoPro cameras, so that the whole scene is in frame at once. After capture, 360° film is edited in much the same way as traditional film, though footage from a multi-camera setup sometimes needs extra editing so that the seams (or stitch lines) between the cameras are invisible. 360° filming brings additional challenges too – when there’s no such place as behind the scenes, you may have to find a creative place to hide equipment and crew while shooting.

There are many ways to view 360° films and videos, from social media and YouTube to various virtual reality platforms. The biggest limitation of this medium is that you cannot move around the scene: you are fixed wherever the film-maker decided to place the camera. You can look around, but only as much as you could from a swivel chair bolted to the floor, which inherently limits the level of immersion a viewer can feel.
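To make "looking around from a fixed point" concrete, here is a minimal sketch of how a viewer might turn a look direction into a pixel in a 360° image. It assumes the image is stored in the common equirectangular layout (longitude across the width, latitude down the height), which the article itself does not specify; the function name and angle conventions are illustrative only.

```python
def direction_to_pixel(yaw_deg, pitch_deg, width, height):
    """Map a look direction to (x, y) in an equirectangular image.

    Assumed conventions (not from the article):
      yaw_deg   -- horizontal angle, 0 = image center, range [-180, 180]
      pitch_deg -- vertical angle, +90 = straight up, -90 = straight down
    """
    u = (yaw_deg + 180.0) / 360.0 * width
    v = (90.0 - pitch_deg) / 180.0 * height
    x = int(u) % width                      # wrap around horizontally
    y = min(max(int(v), 0), height - 1)     # clamp at the poles
    return x, y


# Looking straight ahead lands in the middle of a 4096 x 2048 image.
print(direction_to_pixel(0, 0, 4096, 2048))  # (2048, 1024)
```

Only the two viewing angles appear as inputs. There is no position parameter, which is exactly the swivel-chair limitation described above.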

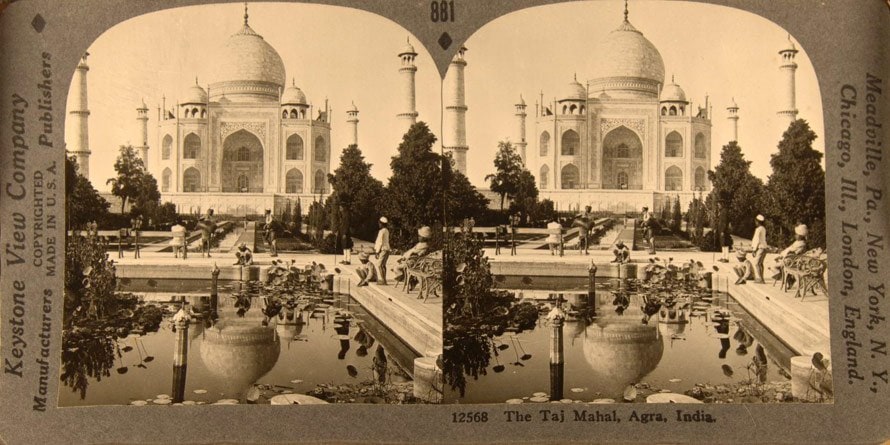

Stereoscopy

Stereoscopy refers to technologies that mimic how human vision works to create depth perception in a scene. We perceive depth with a variety of visual cues and information, but a primary part of how we sense depth is our brains processing the information from two eyes. Since the eyes are at two different positions, near objects can appear in vastly different positions to each eye, whereas far objects appear to be in virtually the same spot. You can try this concept out very easily – hold up a finger close to your face, and alternate eyes – the finger appears to move. If you move it further away, it appears to move much less. Our brains synthesize this information and use it to help us determine distance and perceive the 3D world.
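The finger experiment can be put into rough numbers. The sketch below computes the angle between the two eyes' lines of sight to an object straight ahead. The 63 mm eye separation is an assumed typical value rather than a figure from this article, and the geometry is simplified to a point directly in front of the viewer.

```python
import math


def parallax_angle_deg(distance_m, eye_separation_m=0.063):
    """Angle (degrees) between the two eyes' lines of sight to an object
    straight ahead at distance_m. eye_separation_m is an assumed value."""
    half_angle = math.atan((eye_separation_m / 2.0) / distance_m)
    return math.degrees(2.0 * half_angle)


for distance in (0.2, 2.0, 20.0):
    angle = parallax_angle_deg(distance)
    print(f"{distance:5.1f} m -> {angle:.2f} degrees")
```

The angle shrinks quickly as the object moves away, which is why the finger seems to jump when it is near your face and barely moves at arm's length or beyond.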

Source: lookingglassvr.com

Stereoscopic technology has existed for quite some time. The basic concept is to use two cameras, positioned roughly human-eye distance apart, to film or photograph a scene, and then to view the results in a way that presents each image to one eye only. If you have access to an Oculus Go, Samsung Gear VR or Google Cardboard headset, you can view some digital versions of very early stereoscopic photos using the Looking Glass VR application.

3D movies work the same way – they’re filmed using a special camera that records each eye-image simultaneously (or those same images are generated digitally). When you put on a pair of 3D glasses in the movie theater, the lenses are polarized in such a way that each of your eyes sees only what it is supposed to, to give you the illusion of 3D objects in front of your face.

Source: lookingglassvr.com

Some 360° cameras are also stereoscopic, giving objects in the scene more depth, but your ability to move, or to move the camera within the space, is still limited: stereoscopy is the illusion of 3D, not true 3D. Facebook’s recent foray into 3D photos is also a stereoscopic solution. Phones that support creating compatible photos use the data from dual cameras to create the depth illusion. In the case of the iPhone X, they can also use data from the front-facing structured light depth camera to distinguish foreground and background objects.

The Intel® RealSense™ Depth Cameras D400 series also operate on similar principles: by using two sensors, they calculate depth on a per-pixel basis for an entire image. The D400 series are stereo depth cameras that use the disparities between the two images, along with additional infrared information, to build a highly accurate depth map of any scene.
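As a rough illustration of how a disparity becomes a depth value, the sketch below applies the standard pinhole stereo relation, depth = focal length × baseline / disparity, to a tiny disparity map. The focal length and baseline are made-up example numbers, not D400 specifications, and a real stereo pipeline involves calibration, matching and filtering steps that are left out here.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters for one pixel, or None when no match was found."""
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def depth_map(disparities, focal_px, baseline_m):
    """Convert a 2D grid of disparities (pixels) to depths (meters)."""
    return [
        [depth_from_disparity(d, focal_px, baseline_m) for d in row]
        for row in disparities
    ]


# Hypothetical sensor: 640 px focal length, 5 cm between the two imagers.
disparities = [
    [32, 16],
    [8, 0],   # 0 = no match found for this pixel
]
for row in depth_map(disparities, focal_px=640, baseline_m=0.05):
    print(row)
```

Large disparities correspond to near objects and small disparities to far ones, the same relationship as the finger experiment described earlier.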

Volumetric Capture and Film

We have discussed Volumetric Capture here previously, when covering our showcase at the 2018 Sundance Film Festival, but it’s worth going further into what exactly it is and, more importantly, how you might get started.

Similar to 3D scanning, Volumetric Capture involves capturing objects and people in their entirety, with both depth and from every angle. The difference here is that while 3D scanning (and the related Photogrammetry) is static, and can be done using just one camera moving around a space or object, Volumetric Film is video, so objects and people have to be captured in real time. This means that many cameras need to be used – placed all around the subjects of the film, to capture them from every angle.

Intel Studios

Intel Studios is an example of a Volumetric Capture dome. At 10,000 square feet, it’s the world’s largest. It uses up to 96 high-resolution digital video cameras spaced around the dome to capture everything within the space. Once the images are captured, they are processed by some heavy-duty servers and special software to turn the feed from all these individual cameras into something that is truly 3D. What that means is that volumetric films can be viewed at any time from any angle. Remember the revolutionary bullet time effects in The Matrix? Because they used many cameras to film those shots, they could move the point of view of the viewer around at will. With more advanced technology today, not only can we render out volumetric video from any angle, but we can display that same content in a way that allows the viewer to navigate their way around a scene at will – whether that’s using a mobile phone for Augmented Reality, playing back inside a Virtual Reality headset, or using depth cameras placed on digital screens to allow you to navigate via gestures. Check out the volumetric capture experience created by Intel Studios that debuted at CES 2019. You can also view the behind-the-scenes video below.

Volumetric Capture with Intel RealSense Technology

The second way you can create Volumetric Film content is using depth cameras placed around a scene. Depending on the size of the scene or person you are trying to capture, as few as 6-10 cameras can give good results. The addition of depth information means that you don’t need as many cameras as you would if you were using traditional 2D cameras. It’s also possible to generate a preview of captured data much more quickly since less post processing is necessary.
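One way to see why depth helps: every depth pixel can be turned directly into a 3D point, and points from different cameras can be placed in one shared coordinate system. The sketch below uses a plain pinhole camera model with invented intrinsics and camera poses. It is an illustration of the idea, not the method used by any particular capture package.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Turn pixel (u, v) with a depth reading into a 3D point in the
    camera's own coordinate frame (pinhole model, no lens distortion)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def to_world(point, rotation, translation):
    """Apply a camera-to-world rigid transform: rotation (3x3) then shift."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical intrinsics shared by both cameras.
intrinsics = dict(fx=600.0, fy=600.0, cx=320.0, cy=240.0)

# Camera A sits at the origin looking down +Z.
pose_a = ([[1, 0, 0], [0, 1, 0], [0, 0, 1]], (0.0, 0.0, 0.0))
# Camera B sits 2 m away, turned 180 degrees to face camera A.
pose_b = ([[-1, 0, 0], [0, 1, 0], [0, 0, -1]], (0.0, 0.0, 2.0))

# Both cameras see something 1 m away at their image center.
point_a = to_world(deproject(320, 240, 1.0, **intrinsics), *pose_a)
point_b = to_world(deproject(320, 240, 1.0, **intrinsics), *pose_b)
print(point_a, point_b)
```

Both cameras report the same world position for the surface halfway between them, each seeing it from the opposite side. Merging such points from every camera is what builds up a full volumetric frame.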

Using Intel RealSense Depth Cameras for Volumetric Capture requires a few things. First, the cameras must be positioned around the subject or space so that everything you want to record is in view, since you can only capture what is within the line of sight of at least one camera. Second, the cameras need to be synced together, so that each camera is capturing the same frame at the same time. Finally, along with a reasonably powerful PC to hold the data, you need some specialized software. Currently, DepthKit, Evercoast and Jaunt all offer support for volumetric capture with Intel RealSense D400 series depth cameras. Each has its own setup requirements and output formats, and the choice between them depends on your target use case and project goals.
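The syncing requirement can be illustrated with a small software check. However the cameras are actually synchronized in a given rig, a capture tool still needs to decide which frames belong to the same instant. The sketch below groups frames by timestamp, using hypothetical camera names, timestamps and tolerance. It is not taken from any of the packages named above.

```python
def group_synced_frames(streams, tolerance_ms):
    """Group frames that were captured at (nearly) the same time.

    streams: dict mapping camera id -> list of frame timestamps in ms.
    Returns a list of dicts (camera id -> timestamp), one per moment at
    which every camera has a frame within tolerance_ms of the first
    camera's frame. Moments where any camera is missing are dropped.
    """
    reference_id = next(iter(streams))
    groups = []
    for ref_ts in streams[reference_id]:
        group = {}
        for cam_id, stamps in streams.items():
            nearest = min(stamps, key=lambda ts: abs(ts - ref_ts), default=None)
            if nearest is not None and abs(nearest - ref_ts) <= tolerance_ms:
                group[cam_id] = nearest
        if len(group) == len(streams):
            groups.append(group)
    return groups


# Hypothetical timestamps; cam_b delivered its third frame late.
streams = {
    "cam_a": [0, 33, 66, 100],
    "cam_b": [1, 34, 80, 101],
    "cam_c": [2, 35, 67, 99],
}
for group in group_synced_frames(streams, tolerance_ms=5):
    print(group)
```

Dropping incomplete groups is one possible design choice. A frame set with a late camera would otherwise combine views of the subject at different moments, which shows up as tearing on anything that moves.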

Courtesy Evercoast Studios

The other benefit of using depth cameras to create Volumetric Film content is that the system is scalable and portable. A capture dome like Intel Studios is inherently limited to the location where it is built, and to a fairly specific configuration of cameras, whereas a depth camera solution lets you place cameras wherever they are needed to fill specific holes in the data, allowing for more configurations and for recording live and location-specific events.

Ultimately, we are just beginning to see volumetrically captured content, but with the rise of more interactive content where users can choose their own experience, volumetric capture would enable viewers to choose not only which narrative path they travel down, but also what point of view they watch the story from. Imagine a mystery story where your impression of the characters and content is affected by the angle you choose to view the scene from, not only by the choices you make within the story. New and unique ways of telling and experiencing stories are just around the corner, powered by Intel technologies.
