What does depth bring to Machine Learning?

What is Machine Learning?

Before we start any discussion about Machine Learning, we should discuss what it is. Machine learning makes use of algorithms and statistical models to make predictions and guesses about data. It’s sometimes also referred to as “artificial intelligence” although machine learning is generally about performing a specific task or function, rather than the more general idea of artificial intelligence that can solve many different problems.

For a simple example, let’s look at this pretty famous example in this clip from HBO’s Silicon Valley–

The app can successfully identify “Hotdog” or “not Hotdog.” To create an app like this, the process would involve taking lots of pictures of hotdogs, labeling them as such, and then some pictures of things that aren’t hotdogs, and labeling them as non-hotdogs. A machine learning algorithm powered by this kind of training data should be able to look at a photo it hasn’t seen before, and correctly identify whether or not this photo is of a hotdog. The key feature of this kind of machine learning solution is sample or training data which is correctly labeled, allowing predictive analysis of new, similar data.

Hotdog |

Not Hotdog |

Of course, identifying a hotdog in this way is relatively easy. But what about some interesting edge cases?

How to tell the difference between a car and a hotdog?

https://commons.wikimedia.org/wiki/File:Oscar_meyer_weinermobile.jpg

The Wienermobile in this photo, could potentially be identified as a hotdog. Is it? It has many visual features that make it look like a hotdog, but if you’re using the app to identify something you can eat, it’s going to be a potential failure. As humans, we can look at an image like this and clearly pick out “car” features like the windows, tail lights and wheels, as well as objects in the environment which give us a sense of scale, like the road, lamp-post and buildings.

A machine learning algorithm however, doesn’t have that same context. Unless that algorithm has specifically been trained to include other objects, it’s very challenging to distinguish this from a regular hotdog. Of course, this isn’t exactly a mission-critical application, but as machine learning solutions become important for things like self-driving cars, or other high value and high risk applications, being able to differentiate the edge cases becomes extremely important.

Depth is one method that could solve this problem. If instead of two dimensional images, we had depth images of the hotdog and the wienermobile, that additional piece of context information we needed becomes clearer – if we have trained our network on regular hotdogs from cocktail-size to foot-longs, a 20-30 foot vehicle would successfully return a “not hotdog” result. Depth cameras give you the key piece of information that says this ‘hotdoglike’ item is a certain distance from the camera, so from there, some pretty simple math gives you the approximate size of the object. Since our network is trained on standard size hotdogs, our model would now accurately return the wienermobile as a non-hotdog object.

Is a hotdog in the dark, still a hotdog?

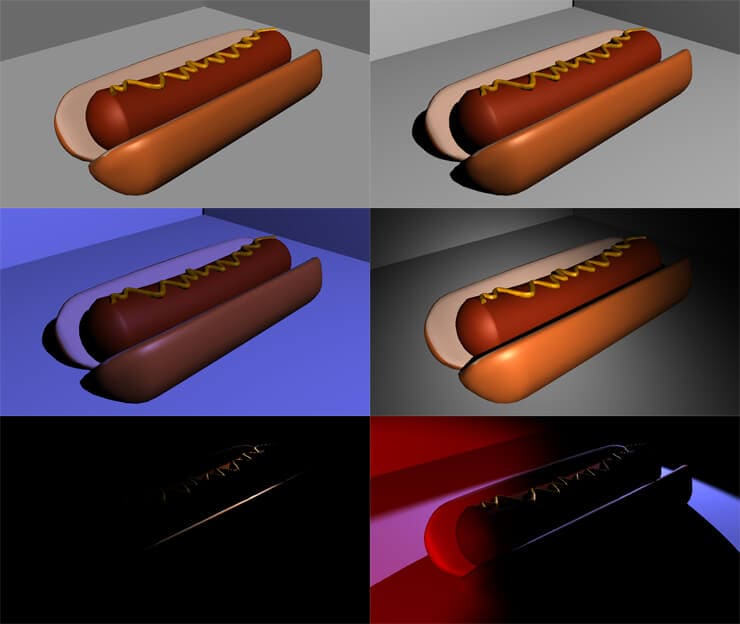

Another problem space for our hotdog/notdog app is that a 2D based system requires us to train the network on many different lighting conditions for our hotdog. Take a look at the following images.

These are 3D generated images of hotdogs with a variety of lighting conditions. Each hotdog is identical, and in each image the position and camera angle is the same, the only difference between the images is the position, shape and color of lights. For most of these images, a human can correctly identify that there’s a hotdog in the picture, with the possible exception of the dark, backlit hotdogs. With the wienermobile, we had the problem of a false positive – a hotdog result where there was no hotdog. In these cases, it’s possible that the system will fail to identify a hotdog even when it’s present.

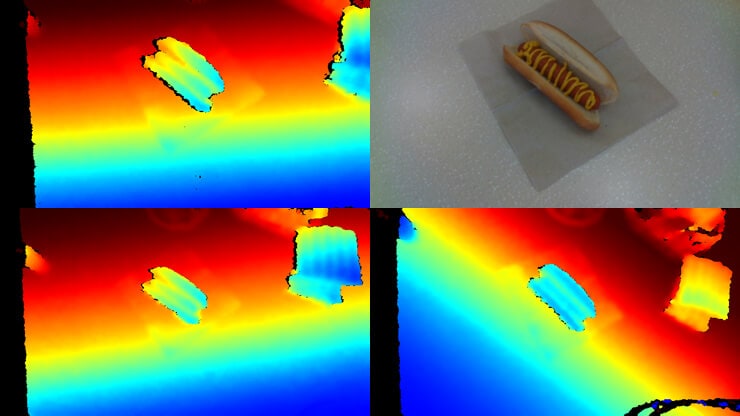

If we return to the idea of our system using a depth camera instead of a 2D camera, lighting conditions don’t matter. Active stereo depth cameras like the Intel® RealSense™ D400 series depth cameras can operate in any lighting condition but give a consistent output. The images below show a hotdog photographed with the Intel RealSense Depth Camera D435. While this camera includes an RGB sensor, it also captures the 3D depth data – which won’t change regardless of the lighting conditions. In the images below, you can see a representation of distance from camera displayed as a color gradient. The hotdog clearly stands out from the background surface, so our trained application would easily be able to classify every hotdog successfully.

Other reasons to use depth

The two reasons stated above – scale and lighting invariance are probably the primary reasons where depth makes sense, but there are other situations where depth can be significantly better than 2D images – for example, if you are training a system to recognize letters from the American sign language alphabet, certain hand and finger positions are much easier to distinguish and classify from a depth image. Being able to segment something out from its background more easily can also be crucial in some use cases. Skeletal tracking, where humans are wearing a variety of clothing that can’t be predicted, can be made more robust simply by having the additional information a depth camera provides.

The downside of machine learning with depth

Today, the biggest hurdle when using depth with your machine learning project is simple – there are fewer depth cameras out there than there are 2D cameras, and a significantly smaller number of depth images when compared with the vast numbers of 2D images available on the internet. Existing machine learning projects have been trained primarily on these 2D images, so the training and data collection for any depth based machine learning project will be the biggest hurdle, but for many projects, the benefits will outweigh this down side.

Subscribe here to get blog and news updates.

You may also be interested in

“Intel RealSense acts as the eyes of the system, feeding real-world data to the AI brain that powers the MR

In a three-dimensional world, we still spend much of our time creating and consuming two-dimensional content. Most of the screens