The basics of stereo depth vision

By Sergey Dorodnicov, Intel® RealSense™ SDK Manager

In this post, we’ll cover the basics of stereoscopic vision, including block-matching, calibration and rectification, depth from stereo using OpenCV, passive vs. active stereo, and the relation to structured light.

Why Depth?

Regular consumer web-cams offer streams of RGB data within the visible spectrum that can be used for object recognition and tracking, as well as basic scene understanding.

- Identifying the exact dimensions of physical objects is still a challenge, even using machine learning. This is where depth cameras can help.

Using a depth camera, you can add a brand‑new channel of information, with distance to every pixel. This new channel is used just like the others — for training and image processing, but also for measurement and scene reconstruction.

Stereoscopic Vision

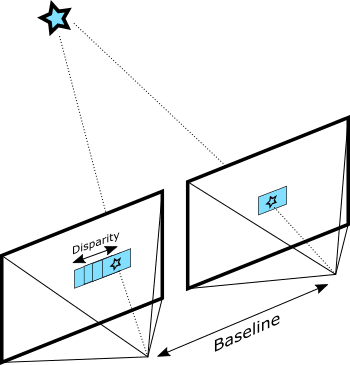

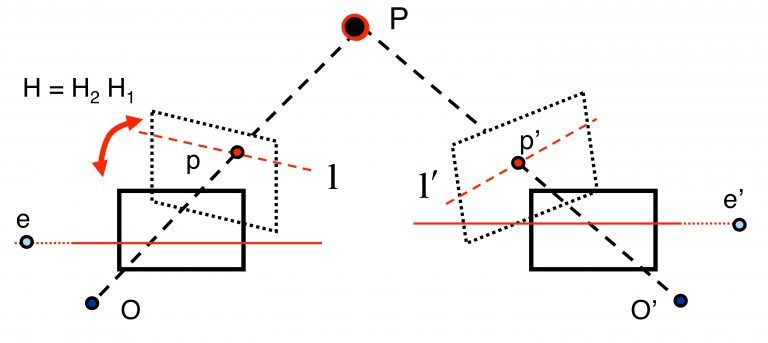

Depth from Stereo is a classic computer vision algorithm inspired by the human binocular vision system. It relies on two parallel view‑ports and calculates depth by estimating disparities between matching key‑points in the left and right images:

Depth from Stereo algorithm finds disparity by matching blocks in left and right images

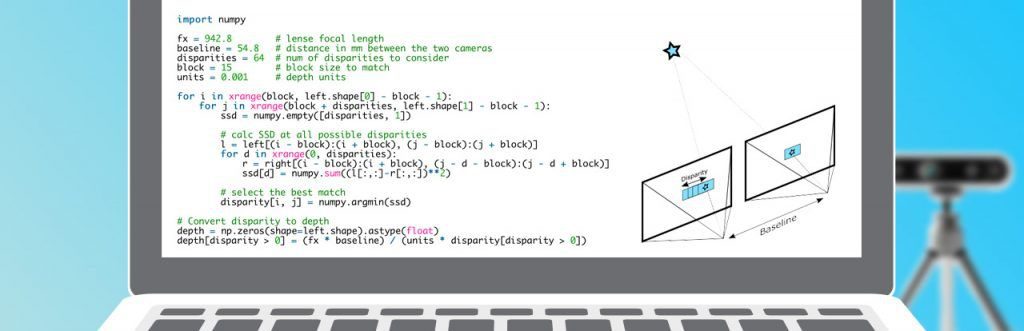

The most naive implementation of this idea is the SSD (Sum of Squared Differences) block‑matching algorithm:

```python
import numpy as np

fx = 942.8        # lens focal length, in pixels
baseline = 54.8   # distance in mm between the two cameras
disparities = 64  # number of disparities to consider
block = 15        # half-size of the block to match (window is 2 * block wide)
units = 0.001     # depth units (the result is depth in mm divided by `units`)

# left / right: the rectified grayscale pair as 2-D arrays of equal shape.
# Convert to float so that subtracting 8-bit pixels cannot wrap around.
left = left.astype(np.float32)
right = right.astype(np.float32)

disparity = np.zeros(left.shape, dtype=np.uint16)

for i in range(block, left.shape[0] - block - 1):
    for j in range(block + disparities, left.shape[1] - block - 1):
        ssd = np.empty(disparities)

        # calc SSD at all possible disparities
        l = left[(i - block):(i + block), (j - block):(j + block)]
        for d in range(disparities):
            r = right[(i - block):(i + block), (j - d - block):(j - d + block)]
            ssd[d] = np.sum((l - r) ** 2)

        # select the best match
        disparity[i, j] = np.argmin(ssd)

# Convert disparity to depth
depth = np.zeros(left.shape, dtype=float)
depth[disparity > 0] = (fx * baseline) / (units * disparity[disparity > 0])
```
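The final conversion step follows from similar triangles: for a rectified pair with focal length f (in pixels) and baseline B, a point seen with disparity d lies at depth Z = f · B / d. Here is a minimal sketch of that relation on its own, assuming the focal length above is expressed in pixels. The helper name `disparity_to_depth_mm` is made up for this illustration and the depth-unit scaling is left out.

```python
import numpy as np

def disparity_to_depth_mm(disparity, fx, baseline_mm):
    """Convert disparity (pixels) to depth (mm) using Z = fx * B / d.

    Pixels with zero disparity (no match, or infinitely far) stay at 0.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.zeros_like(disparity)
    valid = disparity > 0
    depth[valid] = fx * baseline_mm / disparity[valid]
    return depth

fx = 942.8       # focal length, assumed to be in pixels
baseline = 54.8  # mm between the two cameras
depth = disparity_to_depth_mm([0, 1, 32, 64], fx, baseline)
```

With these numbers, a disparity of 64 pixels corresponds to roughly 0.8 m, and halving the disparity doubles the depth. This is why a one-pixel matching error costs far more accuracy at long range than up close.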

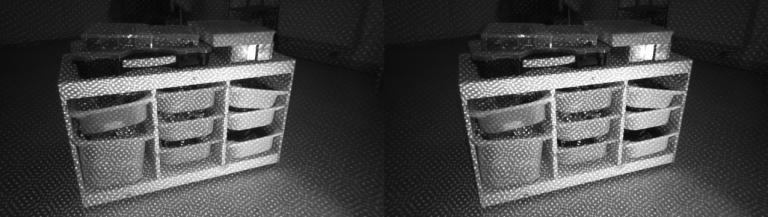

Rectified image pair used as input to the algorithm

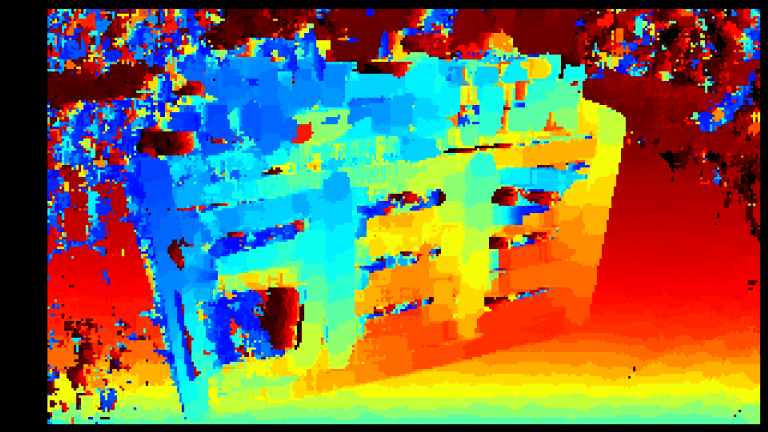

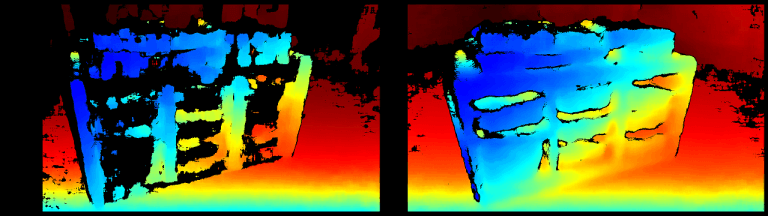

Depth map produced by the naive SSD block-matching implementation

Point-cloud reconstructed using SSD block-matching

There are several challenges that any actual product has to overcome:

- Ensuring that the images are in fact coming from two parallel views

- Filtering out bad pixels where matching failed due to occlusion

- Expanding the range of generated disparities from a fixed set of integers to achieve sub‑pixel accuracy
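One common way to tackle the last point is to fit a parabola through the best SSD cost and its two neighbours and take the parabola's minimum as the disparity. The sketch below shows that technique in isolation. It is not necessarily what any particular product does, and the function name `subpixel_disparity` is hypothetical.

```python
import numpy as np

def subpixel_disparity(ssd):
    """Refine the integer argmin of a 1-D cost curve with a parabola fit."""
    ssd = np.asarray(ssd, dtype=np.float64).ravel()
    d = int(np.argmin(ssd))

    # A parabola needs both neighbours; keep the integer result at the edges
    if d == 0 or d == len(ssd) - 1:
        return float(d)

    c_prev, c_min, c_next = ssd[d - 1], ssd[d], ssd[d + 1]
    curvature = c_prev - 2.0 * c_min + c_next
    if curvature <= 0:  # flat cost curve, nothing to refine
        return float(d)

    # Vertex of the parabola through (-1, c_prev), (0, c_min), (1, c_next)
    return d + 0.5 * (c_prev - c_next) / curvature

# Synthetic cost curve whose true minimum sits between two integer disparities
candidates = np.arange(20)
costs = (candidates - 10.3) ** 2
refined = subpixel_disparity(costs)
```

In the naive listing, this would replace the plain argmin, with the disparity array stored as floating point instead of integers.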

Calibration and Rectification

Having two exactly parallel view‑ports is challenging. While it is possible to generalize the algorithm to any two calibrated cameras (by matching along epipolar lines), the more common approach is image rectification. During this step, the left and right images are re‑projected onto a common virtual plane:

Image Rectification illustrated (Source: Wikipedia*)
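To make the re-projection concrete, here is a small NumPy sketch under simplifying assumptions: both cameras share the same intrinsics K, there is no lens distortion, and only the right camera is rotated away from the parallel configuration. Under those assumptions the rotation can be undone with the homography H = K · Rᵀ · K⁻¹. The principal point, the rotation angles and the test point are invented for the illustration.

```python
import numpy as np

def rotation(deg_x, deg_y):
    """Rotation by deg_y about the y axis followed by deg_x about the x axis."""
    ax, ay = np.radians([deg_x, deg_y])
    rx = np.array([[1, 0, 0],
                   [0, np.cos(ax), -np.sin(ax)],
                   [0, np.sin(ax), np.cos(ax)]])
    ry = np.array([[np.cos(ay), 0, np.sin(ay)],
                   [0, 1, 0],
                   [-np.sin(ay), 0, np.cos(ay)]])
    return rx @ ry

def project(K, R, C, X):
    """Pixel coordinates of 3-D point X in a camera with rotation R, centre C."""
    x = K @ (R @ (X - C))
    return x[:2] / x[2]

fx, baseline = 942.8, 54.8           # pixels, mm
K = np.array([[fx, 0.0, 640.0],      # principal point (640, 360) is assumed
              [0.0, fx, 360.0],
              [0.0, 0.0, 1.0]])
X = np.array([100.0, 200.0, 1000.0])  # test point, mm, in the left camera frame

R_right = rotation(1.0, 2.0)          # right camera is slightly misaligned
C_right = np.array([baseline, 0.0, 0.0])

p_left = project(K, np.eye(3), np.zeros(3), X)
p_right = project(K, R_right, C_right, X)

# Homography that re-projects the right image onto the parallel virtual plane
H = K @ R_right.T @ np.linalg.inv(K)
q = H @ np.array([p_right[0], p_right[1], 1.0])
p_right_rect = q[:2] / q[2]

disparity = p_left[0] - p_right_rect[0]
```

Before rectification the point lands on different rows in the two images. After it, the rows agree and the horizontal offset equals fx · baseline / Z, which is exactly what block matching along a row expects. Real pipelines also correct lens distortion and usually rotate both views.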

Software Stereo

The OpenCV library has everything you need to get started with depth:

- calibrateCamera and stereoCalibrate can be used to generate the intrinsic calibration of each camera and the extrinsic calibration between any two arbitrary view‑ports

- stereoRectify will help you rectify the two images prior to depth generation

- StereoBM and StereoSGBM can be used for disparity calculation

- reprojectImageTo3D projects the disparity image to 3D space

Point-cloud generated using the OpenCV StereoBM algorithm

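```python
import numpy as np
import cv2

fx = 942.8         # lens focal length, in pixels
baseline = 54.8    # distance in mm between the two cameras
disparities = 128  # number of disparities to consider (must be divisible by 16)
block = 31         # block size to match (must be odd)
units = 0.001      # depth units (the result is depth in mm divided by `units`)

# left / right: the rectified pair as 8-bit single-channel images
sbm = cv2.StereoBM_create(numDisparities=disparities,
                          blockSize=block)

# StereoBM returns fixed-point disparities scaled by 16; convert to pixels
disparity = sbm.compute(left, right).astype(np.float32) / 16.0

depth = np.zeros(shape=left.shape).astype(float)
depth[disparity > 0] = (fx * baseline) / (units * disparity[disparity > 0])
```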

The average running time of StereoBM on an Intel(R) Core(TM) i5‑6600K CPU is around 110 ms, for an effective 9 frames‑per‑second (FPS).

- Get the full source code here
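A point cloud can be produced from a depth map by back-projecting every valid pixel through the pinhole camera model. OpenCV's reprojectImageTo3D does this from a disparity image and a Q matrix. The NumPy sketch below starts from depth directly. The vertical focal length `fy` and the principal point `cx`, `cy` are assumptions here, since the post only gives `fx`, and the tiny depth map is synthetic.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an H x W depth map into an N x 3 array of XYZ points.

    Pixels with depth <= 0 are treated as invalid and skipped.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # column and row indices
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.column_stack((x, y, z))

# Synthetic 4 x 6 depth map (mm) with two valid pixels
depth = np.zeros((4, 6))
depth[0, 0] = 500.0
depth[2, 3] = 1000.0

points = depth_to_points(depth, fx=942.8, fy=942.8, cx=3.0, cy=2.0)
```

The pixel at the assumed principal point maps onto the optical axis (x = y = 0). Every other pixel fans out in proportion to its depth.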

Passive vs Active Stereo

The quality of the results you’ll get with this algorithm depends primarily on the density of visually distinguishable points (features) for the algorithm to match. Any source of texture — natural or artificial — will significantly improve the accuracy.

That’s why it’s extremely useful to have an optional texture projector that can usually add details outside of the visible spectrum. In addition, you can use this projector as an artificial source of light for nighttime or dark situations.

Input images illuminated with texture projector

Left: OpenCV StereoBM without projector. Right: StereoBM with projector.
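The effect of texture on matching can be reproduced with a few lines of NumPy. This is a synthetic sketch rather than real camera data. A random-dot image stands in for a textured (or projector-illuminated) surface and a uniform image for a blank wall. In both cases the right view is the left view shifted by a known disparity. The helper `ssd_curve` is hypothetical.

```python
import numpy as np

def ssd_curve(left, right, row, col, half, disparities):
    """SSD cost of the block centred at (row, col) for each candidate disparity."""
    l = left[row - half:row + half + 1, col - half:col + half + 1]
    costs = np.empty(disparities)
    for d in range(disparities):
        r = right[row - half:row + half + 1,
                  col - d - half:col - d + half + 1]
        costs[d] = np.sum((l - r) ** 2)
    return costs

rng = np.random.default_rng(0)
true_disparity = 5

# Textured scene: random dots, right view shifted left by 5 pixels
textured_left = rng.random((40, 80))
textured_right = np.roll(textured_left, -true_disparity, axis=1)

# Textureless scene: a uniform wall looks the same at every shift
flat_left = np.full((40, 80), 0.5)
flat_right = np.roll(flat_left, -true_disparity, axis=1)

textured_costs = ssd_curve(textured_left, textured_right, 20, 40, 3, 16)
flat_costs = ssd_curve(flat_left, flat_right, 20, 40, 3, 16)

best_textured = int(np.argmin(textured_costs))
```

On the textured pair the cost curve has a single minimum at the true disparity. On the uniform pair every candidate scores the same, so the matcher has nothing to choose between. A projected pattern removes that ambiguity.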

Structured‑Light Approach

Structured-Light is an alternative approach to depth from stereo. It relies on recognizing a specific projected pattern in a single image.

- For those interested in a structured‑light solution, there’s the Intel RealSense SR300 camera.

Structured‑light solutions do offer certain benefits; however, they are fragile. Any external interference, from the sun or another structured‑light device, will prevent users from achieving any depth.

In addition, because a laser projector must illuminate the entire scene, power consumption goes up with range, which often requires a dedicated power source.

Depth from stereo, on the other hand, only benefits from a multi-camera setup and can be used with or without a projector.

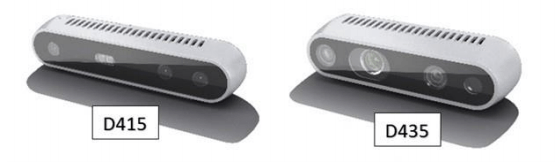

Intel RealSense D400 series depth cameras

Intel RealSense D400 cameras:

- Come fully calibrated, producing hardware‑rectified pairs of images

- Perform all depth calculations at up to 90 FPS

- Offer sub‑pixel accuracy and high fill-rate

- Provide an on‑board texture projector for tough lighting conditions

- Run on a standard USB 5V power source, drawing about 1‑1.5 W

- Are designed from the ground up to:

  - Address conditions critical to robotic/drone developers

  - Overcome the limitations of structured light

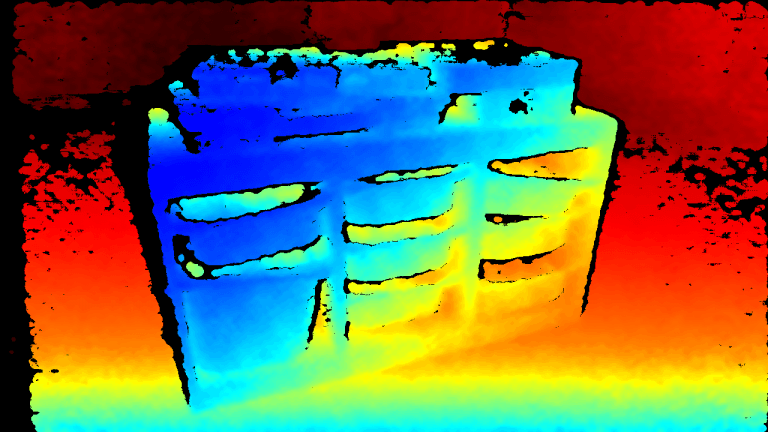

Depth-map using Intel RealSense D415 stereo camera

Point-cloud using Intel RealSense D415 stereo camera

Summary

Just like OpenCV, Intel RealSense technology offers an open‑source, cross‑platform set of APIs for getting depth data.

