Intel RealSense Technology at IROS 2019

From November 4 to 8, 2019, the International Conference on Intelligent Robots and Systems (IROS) took place in Macau, China. Co-sponsored by the IEEE, the IEEE Robotics and Automation Society (RAS), the IEEE Industrial Electronics Society (IES), the Robotics Society of Japan (RSJ), the Society of Instruments and Control Engineers (SICE), and the New Technology Foundation (NTF), IROS is the flagship international conference in robotics and intelligent systems.

The Intel RealSense Team at IROS

The Intel® RealSense™ Technology team was there, exhibiting a variety of robotics projects and solutions. The Intel RealSense booth was not the only place to find Intel RealSense technology at the show, however: a number of challenge participants were using Intel RealSense devices, as were some of the other exhibitors. Here’s a quick recap of where Intel RealSense Technology was seen at IROS.

Challenges and Competitions

The Lifelong Robotic Vision Challenge

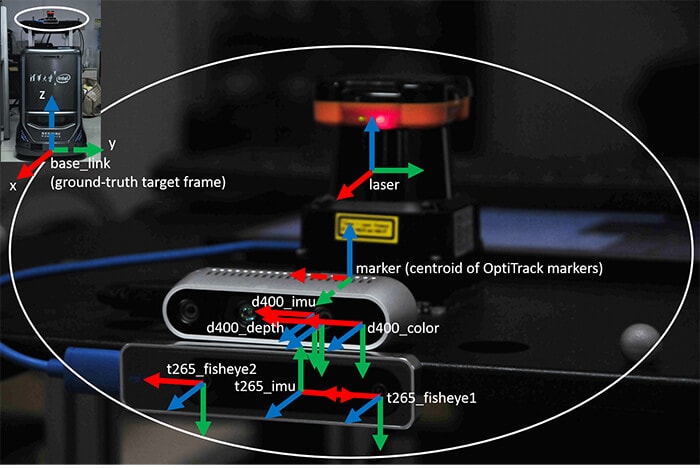

Intel Labs China helped to organize two related challenges that culminated in a workshop at the IROS conference. The goal was to advance state-of-the-art computer vision by providing rich training and testing data, context information and realistic environments, leading to the development of more practical and scalable learning methods. The datasets for the challenges were collected with the Intel RealSense depth camera D435i and the Intel RealSense tracking camera T265, mounted on ground robots: the D435i provided aligned color and depth images, and the T265 provided stereo fisheye images and aligned IMU data. The robots actively recorded videos of target objects under varying lighting conditions, occlusions, camera-object distances and angles, and context (clutter).

The datasets for the Lifelong Object Recognition challenge include common difficulties that a robot would face. For example, any robot has to cope with lighting that changes from day to night and season to season, and with artificial lighting being switched on and off, so the data collection repeated conditions under weak, normal and strong lighting. Similarly, an object that is partially occluded from a robot’s sensors can be harder to identify, which is why including occlusion is necessary for training. Varying object sizes, camera angles and distances also make the challenge more difficult for participants. Clutter, meaning many other objects near the target object, can also interfere with classification.

This challenge was intended to explore how to leverage the knowledge learned from previous tasks to generalize to a new task effectively, and also how to efficiently memorize previously learned tasks. In other words, the goal was to make the robot behave like a human, with knowledge transfer, association and combination capabilities.

The goal of the Lifelong SLAM Challenge was to study and improve the robustness of relocalization and mapping, especially in conditions where objects and features in an environment may move while out of sight of the cameras or sensors. In most real-world scenarios, robots need to operate long term in environments that may change daily and repeatedly. The dataset for this challenge was recorded in homes, offices and other indoor places, with multiple recordings of each place in order to capture real-life scene changes. Ground-truth trajectories were established using a motion capture system and other auxiliary means, and benchmarking tools were provided to evaluate competing SLAM algorithms.
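To give a feel for what evaluating a SLAM algorithm against a ground-truth trajectory can look like, here is a minimal sketch of one widely used metric, the root-mean-square absolute trajectory error. This is not the challenge's own benchmarking tool, and the function name `absolute_trajectory_rmse` is hypothetical. It assumes the two trajectories are already matched sample by sample and expressed in the same coordinate frame; real tools usually add a timestamp association and alignment step first.

```python
import math


def absolute_trajectory_rmse(estimated, ground_truth):
    """Return the RMSE of position error between two matched trajectories.

    Both arguments are sequences of (x, y, z) positions in metres.
    Assumption: sample i of `estimated` corresponds to sample i of
    `ground_truth`, and both are in the same coordinate frame.
    """
    if len(estimated) != len(ground_truth):
        raise ValueError("trajectories must have the same number of samples")
    if not estimated:
        raise ValueError("trajectories must not be empty")

    # Squared Euclidean distance between each pair of matched positions.
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est_pos, gt_pos))
        for est_pos, gt_pos in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    # Made-up illustrative positions, not data from the challenge.
    ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    estimated = [(0.0, 0.0, 0.0), (1.0, 0.1, 0.0), (2.0, 0.2, 0.0)]
    print(f"ATE RMSE: {absolute_trajectory_rmse(estimated, ground_truth):.4f} m")
```

A lower value means the estimated path stays closer to the motion-capture reference over the whole run.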

While the challenge is over, the data sets for both challenges are available here.

Robotic Grasping and Manipulation Competition

This competition had three tracks: service robotics, manufacturing and logistics. In each track, a complete robotic system had to perform all the tasks autonomously, without any human input.

In the service robotics track, robots had to perform specific tasks such as using a spoon to pick up peas. For this task, the table was set with an empty plate, a bowl half full of peas and a cup with a spoon in it, all positioned randomly, so each robot had to locate the items, grasp the spoon from the cup, pick up peas from the bowl and transfer them to the plate, repeating as many times as necessary. Each pea was worth two points, up to 10 points in total. During the competition, the robot was not allowed to knock over any of the objects, but it could drop peas outside the plate without being penalized.
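The pea scoring rule described above can be written out as a small sketch. The function name `pea_task_score` is hypothetical, and the sketch covers only what is stated here: two points per pea on the plate, capped at 10, with no deduction for peas dropped elsewhere. How the organizers handled a knocked-over object is not described, so it is not modelled.

```python
POINTS_PER_PEA = 2   # stated in the task description
MAX_POINTS = 10      # stated cap for the task


def pea_task_score(peas_on_plate: int) -> int:
    """Score the pea-transfer task from the number of peas on the plate.

    Peas dropped outside the plate are simply not counted; per the task
    description they carry no penalty.
    """
    if peas_on_plate < 0:
        raise ValueError("peas_on_plate must be non-negative")
    return min(peas_on_plate * POINTS_PER_PEA, MAX_POINTS)


if __name__ == "__main__":
    for peas in (0, 3, 5, 8):
        print(peas, "peas ->", pea_task_score(peas), "points")
```

Under this rule, five peas already reach the maximum, so extra transfers add no points.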

Other tasks in the challenge included transferring a cup placed on a saucer, using a spoon to stir water in a cup, shaking a salt shaker over a plate, inserting a plug into a socket, placing puzzle pieces on a peg board, putting a straw into a to-go cup lid, pouring water into a cup, and using scissors to cut a piece of paper according to the pattern printed on it.

In the logistics track, the robots had to pick 100 items from a container, one at a time, scan the barcode on each item, and sort it into one of two bins based on that barcode. The 100 different items were placed randomly into bags of 10. While the teams saw pictures of the items before the event, the sorting rules were not available to them until the competition day. Teams were scored based on the difficulty of the items they successfully sorted into each bin without breaking them or dropping them outside the bin.

For the manufacturing track, robots had to assemble and disassemble an assembly task board with a variety of components. While the general components were given to the attendees ahead of the event, the actual competition used a new layout and new surprise components, in order to encourage the use of machine vision in the robotic solutions.

In all of the above tasks, many participants were using Intel RealSense technology to aid in their solutions – whether they were using the tracking cameras, depth cameras or both.

Autonomous Drone Racing challenge

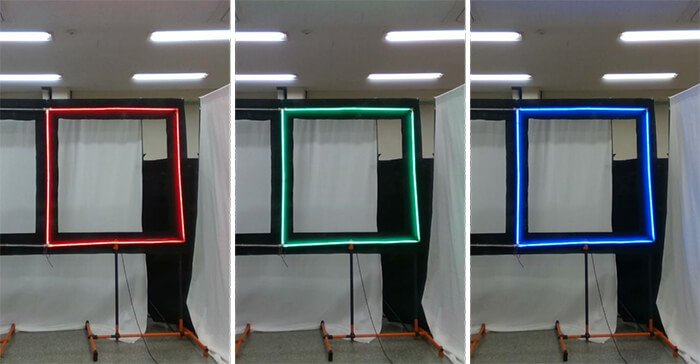

Drones capable of traveling autonomously at high speed have many practical uses, for example in search and rescue operations after a disaster. This competition tested each team’s ability to build a drone that could autonomously travel through the course as quickly as possible. The course included lighted gates that were placed randomly (and moved after each attempt), which the drone had to navigate through in the correct order.

Examples of the highlighted gates.

The winning team from the Autonomous Drone Racing challenge. Their drone used the Intel® RealSense™ Tracking Camera T265.

Other Intel RealSense sightings at the show

A few photos and highlights of devices using Intel RealSense Technology at IROS – by no means exhaustive.

ANYmal C – https://www.anybotics.com/anymal-legged-robot/

Sunspeed Robotics – http://www.amrobots.net/ with Adlink ROS controller – https://www.adlinktech.com/en/index.aspx

Iquotient Robotics – http://www.iquotient-robotics.com/

Unitree Aliengo – http://www.unitree.cc/

Uvify research drones – https://www.uvify.com/

Kuka – https://www.kuka.com/en-de and ASTRI – https://www.astri.org/

RT Corporation Sciurus17 – https://www.rt-net.jp/?lang=en

Meituan Dianping delivery robot – https://about.meituan.com/en

Deep Robotics – http://deeprobotics.cn/
